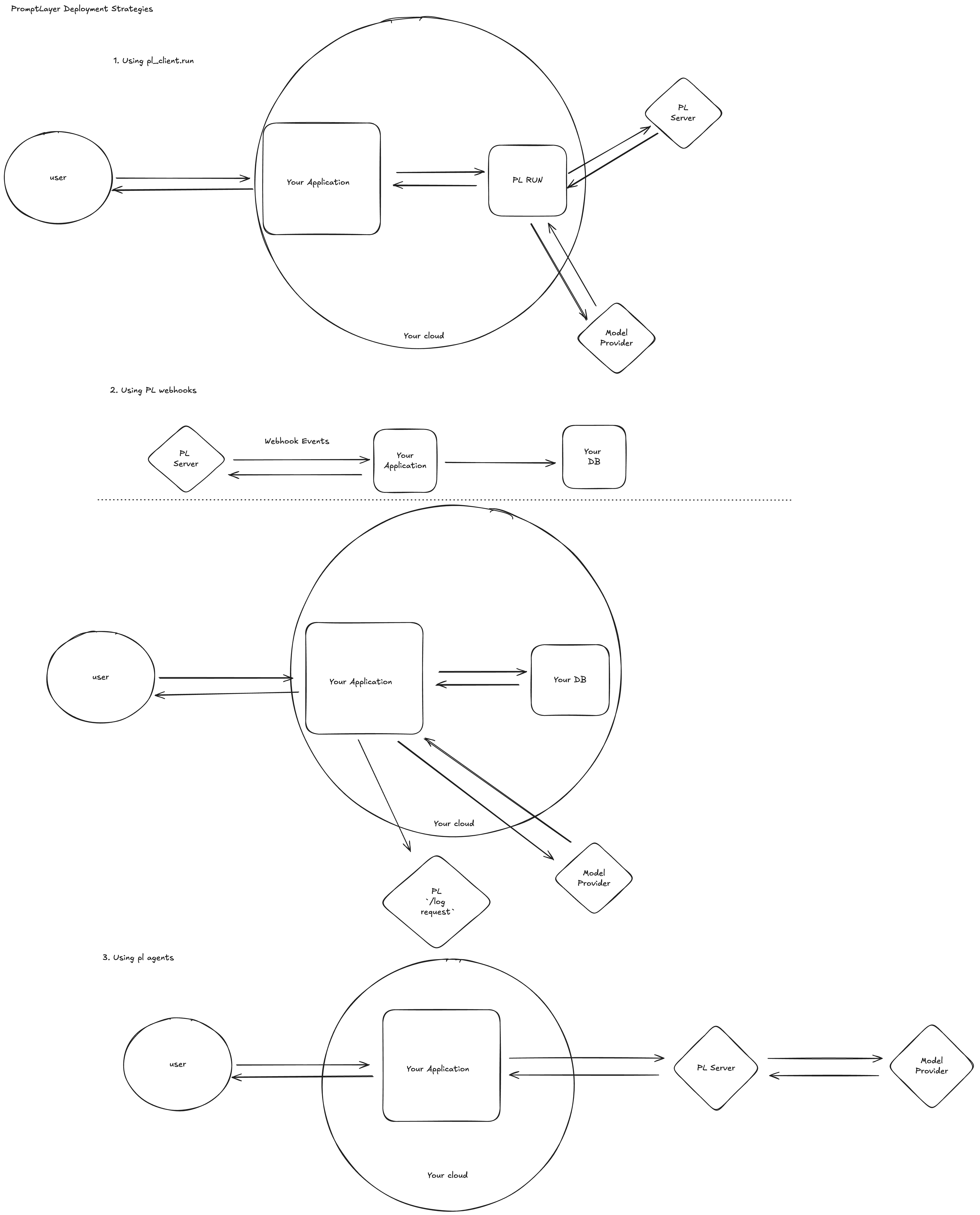

- promptlayer_client.run – zero-setup SDK sugar

- Webhook-driven caching – maintain a local cache of prompt templates

- Managed Agents – let PromptLayer orchestrate everything server-side

Use promptlayer_client.run (quickest path)

When every millisecond of developer time counts, call promptlayer_client.run() directly from your application code.

- Fetch latest prompt – The SDK pulls the template from PromptLayer: the latest version by default, or the version or release label you specify.

- Execute – The SDK, running inside your application, sends the populated prompt to the model provider (OpenAI, Anthropic, Gemini, …).

- Log – The SDK writes the raw request/response pair back to PromptLayer.
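To make the version/label resolution concrete, the three steps can be modeled in a few lines. This is an illustrative sketch, not the SDK's implementation: PromptVersion, resolve, and run_prompt are hypothetical names, templates use Python str.format placeholders, and call_model stands in for a real provider client.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set


@dataclass
class PromptVersion:
    version: int
    template: str  # str.format-style placeholders, e.g. "Hello {name}"
    labels: Set[str] = field(default_factory=set)  # release labels such as "prod"


def resolve(versions: List[PromptVersion], version: Optional[int] = None,
            label: Optional[str] = None) -> PromptVersion:
    """Step 1 (fetch): pick an explicit version, a release label, or the latest."""
    if version is not None:
        matches = [v for v in versions if v.version == version]
    elif label is not None:
        matches = [v for v in versions if label in v.labels]
    else:
        matches = versions
    if not matches:
        raise LookupError("no matching prompt version")
    return max(matches, key=lambda v: v.version)


def run_prompt(versions: List[PromptVersion], variables: Dict[str, str],
               call_model: Callable[[str], str], log: List[dict],
               version: Optional[int] = None, label: Optional[str] = None) -> str:
    pv = resolve(versions, version, label)
    prompt = pv.template.format(**variables)   # populate the template
    response = call_model(prompt)              # step 2 (execute): hypothetical provider call
    # step 3 (log): record which version produced which request/response pair
    log.append({"version": pv.version, "request": prompt, "response": response})
    return response
```

With versions = [PromptVersion(1, "Hi {name}", {"prod"}), PromptVersion(2, "Hello {name}!")] and str.upper as a fake provider, run_prompt(versions, {"name": "Ada"}, str.upper, []) returns "HELLO ADA!", while label="prod" would select version 1 instead.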

💡 Tip – If latency is critical, enqueue the log to a background worker and let your request return immediately.
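One way to apply this tip is a single background thread draining an in-process queue. A minimal sketch using only the standard library; send is a hypothetical stand-in for whatever call actually writes the log to PromptLayer.

```python
import queue
import threading
from typing import Callable


def start_log_worker(send: Callable[[dict], None]) -> "queue.Queue":
    """Start a daemon thread that passes each queued log record to send()."""
    q: "queue.Queue" = queue.Queue()

    def worker() -> None:
        while True:
            record = q.get()
            try:
                if record is None:  # sentinel: stop the worker
                    return
                send(record)  # hypothetical: forward the record to PromptLayer
            except Exception:
                pass  # a failed log must not kill the worker; real code should report it
            finally:
                q.task_done()  # runs on every path, including the sentinel

    threading.Thread(target=worker, daemon=True).start()
    return q
```

Request handlers call q.put(record) and return immediately. Records still in memory when the process exits are lost, which is one reason to prefer an external queue such as Redis or SQS when log durability matters.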

Cache prompts with Webhooks

Eliminate the extra round-trip by replicating prompts into your own cache or database. PromptLayer keeps that cache fresh through webhook events, so no polling is required.

Step-by-step

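- Subscribe to webhooks in the PromptLayer UI.

- Maintain a local cache: update it whenever a webhook event reports a prompt change.

- Serve traffic: read templates from the local cache, call the model provider directly, and log each request to PromptLayer.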
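A sketch of the "maintain a local cache" step, assuming each webhook event carries the prompt name, an increasing version number, and the new template. The event shape and the PromptCache class are hypothetical; map them from the real payload described in the Webhooks guide. Comparing versions lets the cache tolerate duplicate or out-of-order deliveries, and deletions are kept as tombstones so a late update cannot resurrect a deleted prompt.

```python
import threading
from typing import Dict, Optional, Tuple


class PromptCache:
    """Local prompt cache updated from webhook events.

    Hypothetical event shape: {"type": "updated" | "deleted", "name": str,
    "version": int, "template": str}. Adapt it to the real payload.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        # name -> (version, template); a template of None marks a deleted prompt
        self._entries: Dict[str, Tuple[int, Optional[str]]] = {}

    def apply(self, event: dict) -> bool:
        """Apply one event. Returns False if it is a duplicate or arrived out of order."""
        name, version = event["name"], event["version"]
        template = None if event["type"] == "deleted" else event["template"]
        with self._lock:
            current = self._entries.get(name)
            if current is not None and version <= current[0]:
                return False  # we already hold this version or a newer one
            self._entries[name] = (version, template)
            return True

    def get(self, name: str) -> Optional[str]:
        """Return the cached template, or None if unknown or deleted."""
        with self._lock:
            entry = self._entries.get(name)
        return entry[1] if entry is not None else None
```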

Tip: Most teams push the track_to_promptlayer call onto a Redis or SQS queue so that logging a request does not block it.

Read the full guide: PromptLayer Webhooks
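The Webhooks guide also covers signature verification, which is worth doing before an event touches your cache. The exact scheme is defined in that guide; this sketch assumes an HMAC-SHA256 hex digest of the raw request body computed with a shared secret, a common convention rather than a confirmed detail of PromptLayer's implementation.

```python
import hashlib
import hmac


def verify_signature(raw_body: bytes, received_sig: str, secret: str) -> bool:
    """Return True if received_sig equals HMAC-SHA256(secret, raw_body) as hex.

    ASSUMPTION: header name, encoding, and signed content must be adjusted
    to match the scheme documented in the PromptLayer Webhooks guide.
    """
    expected = hmac.new(secret.encode(), raw_body, hashlib.sha256).hexdigest()
    # compare_digest compares in constant time, so timing does not leak the signature
    return hmac.compare_digest(expected, received_sig)
```

Verify against the raw bytes you received, before any JSON parsing, since re-serializing the payload can change the bytes and break the comparison.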

Run fully-managed Agents

For complex workflows that require orchestration, use PromptLayer's managed agent infrastructure.

How it works

- Define multi-step workflows in PromptLayer’s Agent Builder

- Trigger agent execution via API

- Monitor the execution, which runs on PromptLayer servers

- Receive results via webhook or polling

Implementation
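For the "receive results via polling" path, a client typically repeats a status check with exponential backoff until the run reaches a final state. A minimal sketch: get_status is a hypothetical callable wrapping the status request, and the "completed" and "failed" values are assumptions, not PromptLayer's documented states.

```python
import time
from typing import Callable


def wait_for_result(get_status: Callable[[], dict], timeout: float = 60.0,
                    initial_delay: float = 0.5, max_delay: float = 8.0,
                    sleep: Callable[[float], None] = time.sleep) -> dict:
    """Poll get_status() with exponential backoff until a terminal status appears.

    get_status is hypothetical and assumed to return a dict with a "status" key.
    """
    deadline = time.monotonic() + timeout
    delay = initial_delay
    while True:
        result = get_status()
        if result["status"] in ("completed", "failed"):  # assumed terminal states
            return result
        if time.monotonic() + delay > deadline:
            raise TimeoutError("agent run did not finish before the timeout")
        sleep(delay)
        delay = min(delay * 2, max_delay)  # double the wait, capped at max_delay
```

Injecting sleep keeps the function easy to test; in production the default time.sleep applies. If you receive results via webhook instead, no polling loop is needed.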

Which pattern should I pick?

| Requirement | promptlayer_client.run | Webhook Cache | Managed Agent |
|---|---|---|---|
| ⏱️ Extreme latency requirements | ❌ | ✅ | ✅ |
| 🛠 Single LLM call | ✅ | ✅ | ➖ |
| 🌩 Complex plans / tools | ➖ | ➖ | ✅ |
| 👥 Non-engineer prompt editors | ✅ | ✅ | ✅ |
| 🧰 Zero ops overhead | ✅ | ➖ | ✅ |
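The table can be read as a filter: keep the patterns that get a ✅ for every requirement you have. A small sketch that transcribes the table, treating ➖ as not a full fit; the dictionary keys are shorthand chosen for this example.

```python
from typing import Dict, List, Optional

PATTERNS = ["run", "webhook_cache", "managed_agent"]

# ✅ -> True, ❌ -> False, ➖ -> None (not a full fit), transcribed from the table
MATRIX: Dict[str, Dict[str, Optional[bool]]] = {
    "extreme_latency": {"run": False, "webhook_cache": True, "managed_agent": True},
    "single_llm_call": {"run": True, "webhook_cache": True, "managed_agent": None},
    "complex_tools": {"run": None, "webhook_cache": None, "managed_agent": True},
    "non_eng_editors": {"run": True, "webhook_cache": True, "managed_agent": True},
    "zero_ops": {"run": True, "webhook_cache": None, "managed_agent": True},
}


def pick(requirements: List[str]) -> List[str]:
    """Return the patterns that have a full ✅ for every listed requirement."""
    return [p for p in PATTERNS if all(MATRIX[r][p] is True for r in requirements)]
```

For example, a single LLM call with zero ops overhead leaves only promptlayer_client.run, while adding extreme latency requirements to a single LLM call points to the webhook cache.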

Further reading 📚

- Quickstart – Your first prompt

- Webhooks – Events & signature verification

- Agents – Concepts & versioning

- CI for prompts – Continuous Integration guide

✉️ Need a hand? Ping us on Discord or email hello@promptlayer.com; we're happy to chat architecture!